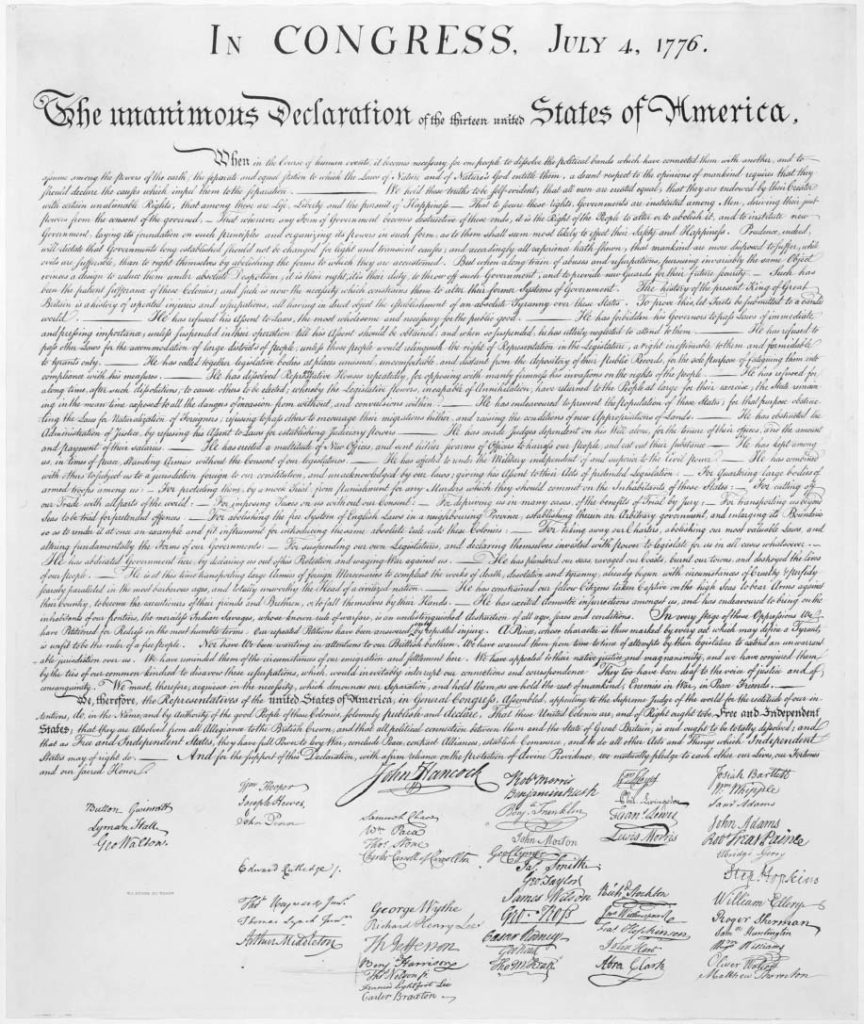

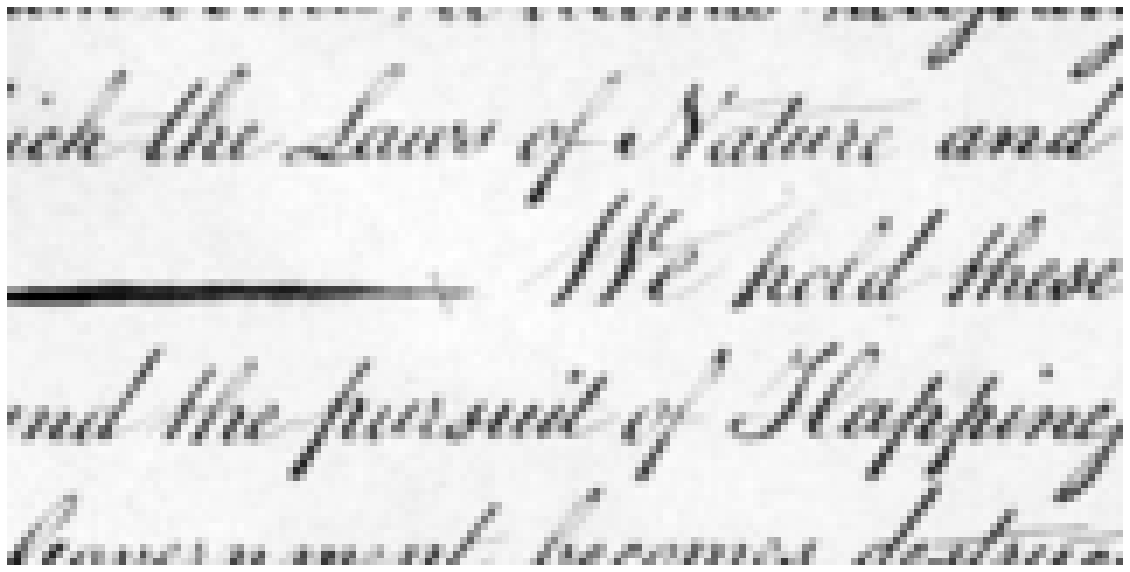

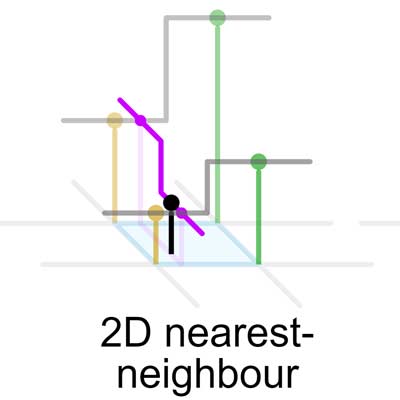

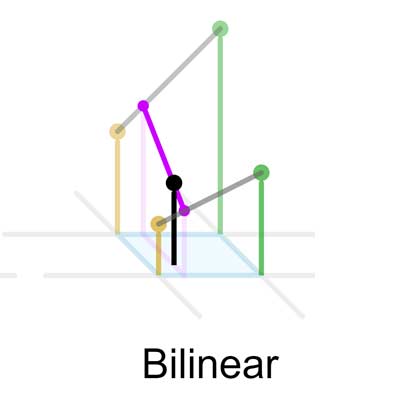

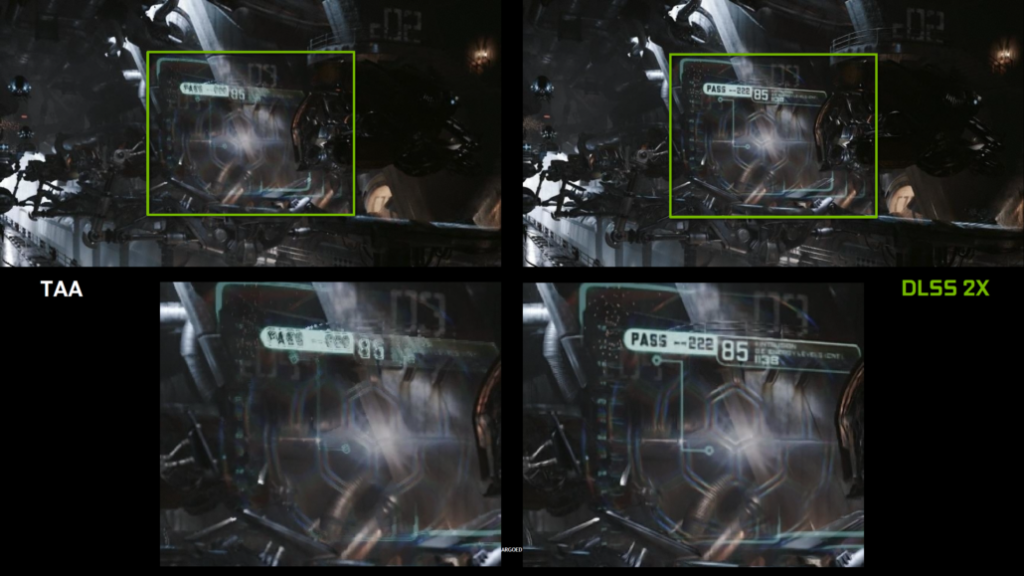

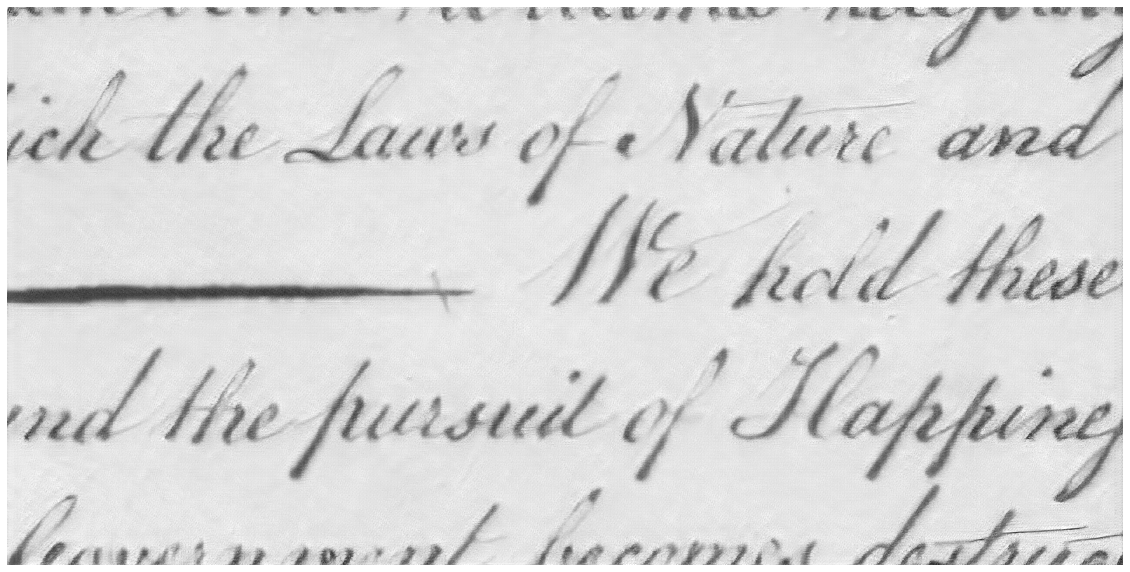

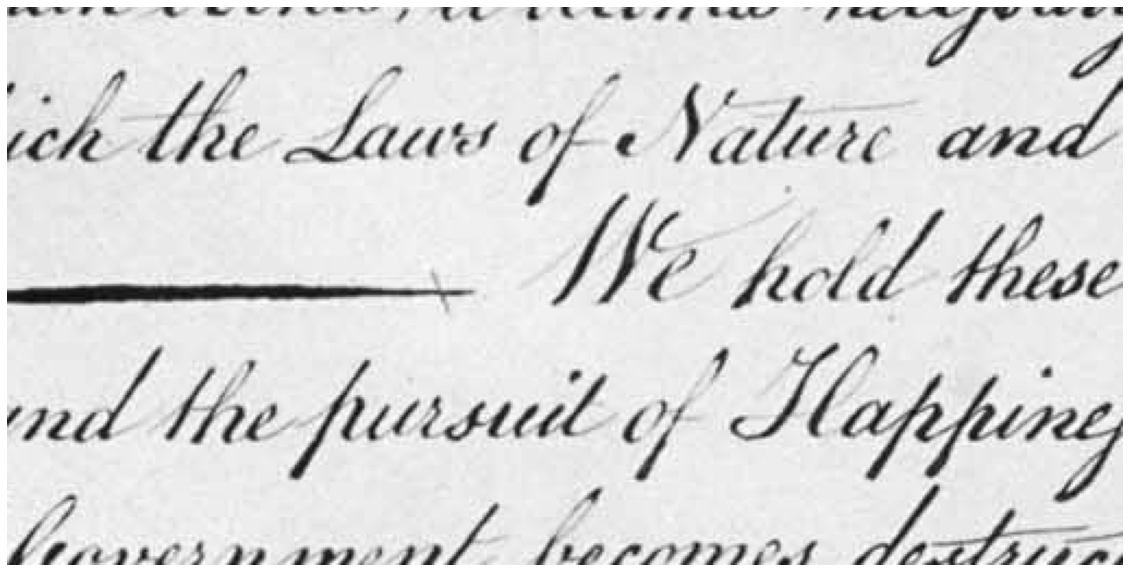

Unfortunately, the process of upscaling real time computer graphics has certain advantages. For example, one usually has several images in a sequence that can be used to extract additional information that may be lost in individual images. One can also use additional information provided by the rendering engine, like motion vectors or even object stencils. When dealing with scanned pages of old documents, we have none of these things. We only have one image, and we have to “imagine” any kind of extra information. Fortunately, this is an area where AI has excelled as well. This particular sub field has made use of so called Generative Adversarial Networks, and while they are not yet really used in production level environments, they do show remarkable potential. They work by employing two separate neural networks: A generator and a discriminator. In the most common use case, the generator creates new images, while the discriminator tries to spot fake images among real ones from a given training dataset. The training process is a zero sum game where one network gets better at faking images while the other one gets better at identifying fakes. When trained long enough, GANs have been shown to produce photorealistic results. If we would like to create completely new images, we would essentially feed random data to the generator as inputs. This is very interesting for artists or content creators, but we actually want to improve existing images. To do that, we need a slightly modified setup, for which we took a closer look at the architecture described in this paper: Photo-Realistic Single Image Super-Resolution Using a Generative AdversarialNetwork. The details are a bit too involved for this post, but the results speak for themselves.