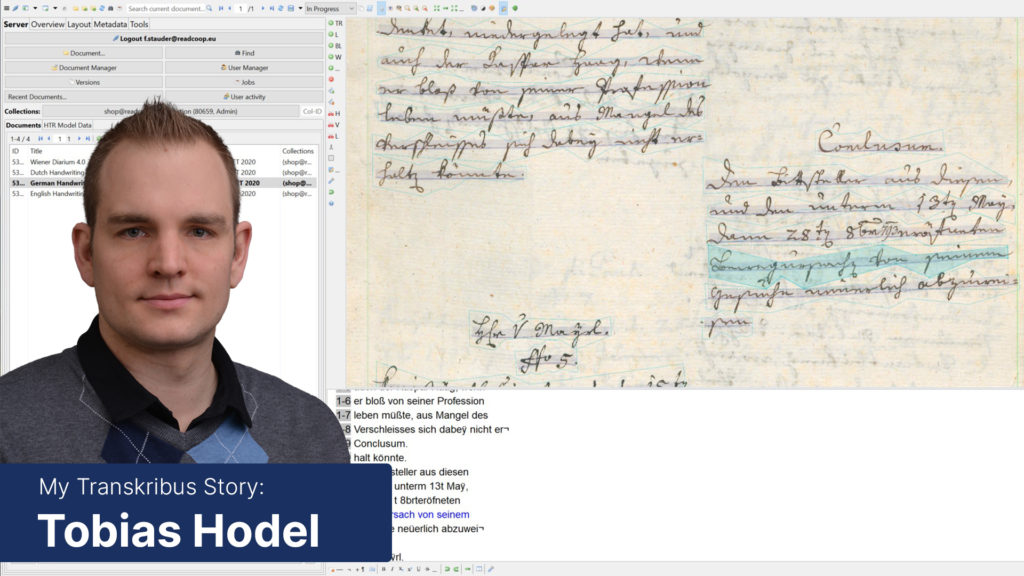

In 2016 I joined the READ project for the state archives of Zurich. Within the large project, I became part of the dissemination working group and responsible for the alignment of more than 100’000 pages of handwritten minutes from the Zürich executives of the 19th century. Thanks to READ, I could not only travel Europe & the US for more than 50 Transkribus related workshops and talks. Furthermore, I got in contact with numerous scholars, archivists, librarians, and scientists trying to get the most out of HTR, KWS, (semantically enhanced) Layout Analysis, and much more. I had the privilege of seeing written cultural heritage in its incredible variety and discussing its specificity with experts.

One of the consequences of using, thinking, and talking about machine learning daily was to encounter this approach and its advantages and problems in-depth and shape my research accordingly. The result of my usage of Transkribus was thus not only hundreds of HTR+ and PyLAIA models as well as the preparation of thousands of pages of Ground Truth (see, e.g., the public model StAZH_RRB_German_Kurrent_XIX based on 26 million words). It’s rather the insight that it’s our duty as scholars to use and critically analyze deep learning not only to make cultural heritage accessible but help understand the technology and its pitfalls for our future benefit.

Regarding Transkribus, I understand the platform as ready to use if several hundreds of images need to be processed and a stable environment is essential. For a scholarly edition project (koenigsfelden.uzh.ch), we used Transkribus as our hub for transcriptions, resulting in some HTR models as a by-product. At the end of my tenure at the state archives of Zürich, we started a variety of projects building on HTR+ and p2pala to prepare vast amounts of pre-modern text and use semantic annotations to speed up archival indexing. For the whole GLAM field, I believe this is the way to go.

In 2019 – in no small part thanks to the success of READ – I was offered a tenure track position at the University of Bern with the task to provide the faculty with approaches to digital humanities. Since then, I have been using Transkribus in teaching and currently think about the next steps in text annotation, including Named Entity Recognition (esp. for historical languages) and Content Extraction (e.g., using Topic Modeling).

Want to know more? I published in German and English about Transkribus, HTR, and consequences of the use of machine learning in the humanities (besides some stuff about the Middle Ages 😉

See my page at the University of Bern here, and my ORCiD profile, or follow me on Twitter.